Getting Started

Installation

To install AlphaCube, open a terminal and run the following command:

pip install -U alphacube
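
To confirm that the install succeeded, one option is to query the installed version through the Python standard library. The sketch below assumes only that the distribution name matches the pip command above; `installed_version` is a hypothetical helper and not part of AlphaCube.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(dist_name: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is absent."""
    try:
        return version(dist_name)
    except PackageNotFoundError:
        return None


found = installed_version("alphacube")
print(f"alphacube version: {found}" if found else "alphacube is not installed")
```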

Usage

Basic

Using AlphaCube in Python is simple. The first time alphacube.load() is called, the required model data will be downloaded and cached.

import alphacube

# Load a pre-trained model
# Defaults to "small" on CPU, "large" on GPU
alphacube.load()

# Solve the cube using a given scramble
result = alphacube.solve(
    scramble="D U F2 L2 U' B2 F2 D L2 U R' F' D R' F' U L D' F' D R2",
    beam_width=1024,
)
print(result)

Output:

{
    'solutions': [
        "D L D2 R' U2 D B' D' U2 B U2 B' U' B2 D B2 D' B2 F2 U2 F2"
    ],
    'num_nodes': 19744,  # Total search nodes explored
    'time': 1.4068585219999659  # Time in seconds
}
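
The returned dictionary can be post-processed like any other Python data. As a minimal sketch, the hypothetical helper `summarize` below counts moves by splitting each solution on whitespace and formats the three keys shown in the output above. The sample data is copied from that output so the snippet runs without loading a model.

```python
def summarize(result: dict) -> str:
    """Build a one-line summary of a result dictionary."""
    solutions = result["solutions"]
    # Each move is a whitespace-separated token such as "R'" or "U2".
    shortest = min(len(s.split()) for s in solutions)
    return (
        f"{len(solutions)} solution(s), shortest {shortest} moves, "
        f"{result['num_nodes']} nodes, {result['time']:.2f} s"
    )


# Sample data copied from the documented output, not a fresh solver run.
sample = {
    "solutions": [
        "D L D2 R' U2 D B' D' U2 B U2 B' U' B2 D B2 D' B2 F2 U2 F2"
    ],
    "num_nodes": 19744,
    "time": 1.4068585219999659,
}
print(summarize(sample))
```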

Improving Solution Quality

If you want shorter solutions, increase the beam_width parameter.

This makes the search more exhaustive at the cost of more computation time.

result = alphacube.solve(
    scramble="D U F2 L2 U' B2 F2 D L2 U R' F' D R' F' U L D' F' D R2",
    beam_width=65536,
)
print(result)

Output:

{
    'solutions': [
        "D' R' D2 F' L2 F' U B F D L D' L B D2 R2 F2 R2 F'",
        "D2 L2 R' D' B D2 B' D B2 R2 U2 L' U L' D' U2 R' F2 R'"
    ],
    'num_nodes': 968984,
    'time': 45.690575091997744
}
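
Because wider beams cost more time, one practical pattern is to start narrow and widen only until the solution is short enough. The sketch below illustrates that idea. `solve_within` and `fake_solve` are hypothetical names, and the handling of a `None` or empty result is an assumption about how a solver might report failure rather than documented behavior. Only the `scramble` and `beam_width` keyword arguments from the examples above are used.

```python
from typing import Callable, Iterable, Optional


def solve_within(
    solve_fn: Callable[..., Optional[dict]],
    scramble: str,
    max_moves: int,
    beam_widths: Iterable[int] = (1024, 4096, 16384, 65536),
) -> Optional[dict]:
    """Try increasing beam widths until a solution has at most max_moves moves."""
    for width in beam_widths:
        result = solve_fn(scramble=scramble, beam_width=width)
        if not result or not result.get("solutions"):
            continue  # nothing found at this width; try a wider beam
        best = min(len(s.split()) for s in result["solutions"])
        if best <= max_moves:
            # Record which width succeeded alongside the solver's own keys.
            return {**result, "beam_width": width}
    return None


def fake_solve(scramble: str, beam_width: int) -> dict:
    """Stand-in solver for demonstration: only the widest beam gives 19 moves."""
    length = 21 if beam_width < 65536 else 19
    return {"solutions": [" ".join(["U"] * length)], "num_nodes": 0, "time": 0.0}


found = solve_within(fake_solve, "R U R' U'", max_moves=19)
print(found["beam_width"])
```

With a loaded model, `alphacube.solve` could be passed in place of `fake_solve`.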

Allowing A Few Extra Moves

You can obtain not just the shortest solutions but also slightly longer ones, using the extra_depths parameter.

This instructs the solver to continue searching after the first solution is found.

alphacube.load()  # model_id="small"

result = alphacube.solve(
    scramble="D U F2 L2 U' B2 F2 D L2 U R' F' D R' F' U L D' F' D R2",
    beam_width=65536,
    extra_depths=1
)
print(result)

Output:

{
    'solutions': [
        "D' R' D2 F' L2 F' U B F D L D' L B D2 R2 F2 R2 F'",
        "D2 L2 R' D' B D2 B' D B2 R2 U2 L' U L' D' U2 R' F2 R'",
        "D R F2 L' U2 R2 U2 R2 B2 U' F B2 D' F' D' R2 F2 U F2 L2",  # extra
        "L' D' R' D2 L B' U F2 U R' U' F B' R2 B R B2 F D2 B",  # extra
        "R' F L2 D R2 U' B' L' U2 F2 U L U B2 U2 R2 D' U B2 R2",  # extra
        "L' U' F' R' U D B2 L' B' R' B U2 B2 L2 D' R2 U' D R2 U2"  # extra
    ],
    'num_nodes': 1100056,
    'time': 92.809575091997744
}
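
When extra depths are requested, the returned list mixes solutions of different lengths. A small sketch of separating them by move count follows. `group_by_length` is a hypothetical helper, and the input is the solution list copied from the output above.

```python
from collections import defaultdict


def group_by_length(solutions: list[str]) -> dict[int, list[str]]:
    """Group solution strings by their number of moves, shortest first."""
    groups: dict[int, list[str]] = defaultdict(list)
    for solution in solutions:
        groups[len(solution.split())].append(solution)
    return dict(sorted(groups.items()))


# Solutions copied from the documented output for extra_depths=1.
solutions = [
    "D' R' D2 F' L2 F' U B F D L D' L B D2 R2 F2 R2 F'",
    "D2 L2 R' D' B D2 B' D B2 R2 U2 L' U L' D' U2 R' F2 R'",
    "D R F2 L' U2 R2 U2 R2 B2 U' F B2 D' F' D' R2 F2 U F2 L2",
    "L' D' R' D2 L B' U F2 U R' U' F B' R2 B R B2 F D2 B",
    "R' F L2 D R2 U' B' L' U2 F2 U L U B2 U2 R2 D' U B2 R2",
    "L' U' F' R' U D B2 L' B' R' B U2 B2 L2 D' R2 U' D R2 U2",
]
for length, group in group_by_length(solutions).items():
    print(f"{length} moves: {len(group)} solution(s)")
```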

GPU Acceleration

If you have a compatible GPU or a Mac, you can get a significant speedup by loading the "large" model.

alphacube.load(model_id="large")

result = alphacube.solve(
    scramble="D U F2 L2 U' B2 F2 D L2 U R' F' D R' F' U L D' F' D R2",
    beam_width=65536,
)
print(result)

Output:

{
    'solutions': ["D F L' F' U2 B2 U F' L R2 B2 U D' F2 U2 R D'"],
    'num_nodes': 903448,
    'time': 20.46845487099995
}
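
One rough way to compare runs is search throughput, computed from the `num_nodes` and `time` keys of each result. The sketch below applies that to two of the documented outputs. `nodes_per_second` is a hypothetical helper, and since the text does not state which hardware produced each run, the figures are only illustrative.

```python
def nodes_per_second(result: dict) -> float:
    """Search throughput: explored nodes divided by elapsed seconds."""
    return result["num_nodes"] / result["time"]


# Values copied from the documented outputs for beam_width=65536.
runs = {
    "default model": {"num_nodes": 968984, "time": 45.690575091997744},
    "large model": {"num_nodes": 903448, "time": 20.46845487099995},
}
for label, run in runs.items():
    print(f"{label}: {nodes_per_second(run):,.0f} nodes/s")
```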

Applying Ergonomic Bias

To find solutions that are easier to execute manually, provide an ergonomic_bias dictionary to the solve method.

Each move is assigned a score, where higher scores indicate more desirable moves. This also enables wide moves like u and r.

ergonomic_bias = {
    "U": 0.9, "U'": 0.9, "U2": 0.8,
    "R": 0.8, "R'": 0.8, "R2": 0.75,
    "L": 0.55, "L'": 0.4, "L2": 0.3,
    "F": 0.7, "F'": 0.6, "F2": 0.6,
    "D": 0.3, "D'": 0.3, "D2": 0.2,
    "B": 0.05, "B'": 0.05, "B2": 0.01,
    "u": 0.45, "u'": 0.45, "u2": 0.4,
    "r": 0.3, "r'": 0.3, "r2": 0.25,
    "l": 0.2, "l'": 0.2, "l2": 0.15,
    "f": 0.35, "f'": 0.3, "f2": 0.25,
    "d": 0.15, "d'": 0.15, "d2": 0.1,
    "b": 0.03, "b'": 0.03, "b2": 0.01
}

result = alphacube.solve(
    scramble="D U F2 L2 U' B2 F2 D L2 U R' F' D R' F' U L D' F' D R2",
    beam_width=65536,
    ergonomic_bias=ergonomic_bias
)
print(result)

Output:

{
    'solutions': [
        "u' U' f' R2 U2 R' L' F' R D2 f2 R2 U2 R U L' U R L",
        "u' U' f' R2 U2 R' L' F' R D2 f2 R2 U2 R d F' U f F",
        "u' U' f' R2 U2 R' L' F' R u2 F2 R2 D2 R u f' l u U"
    ],
    'num_nodes': 1078054,
    'time': 56.13087955299852
}
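
When several solutions come back, the same bias dictionary can also be used afterwards to rank them by hand. The sketch below averages the per-move scores of each solution. This is a post-hoc ranking for illustration only and says nothing about how the solver applies the bias internally. `mean_bias` and `rank_by_ergonomics` are hypothetical helpers, and scoring unknown moves as 0.0 is an assumption of this sketch.

```python
def mean_bias(solution: str, bias: dict[str, float]) -> float:
    """Average ergonomic score over the moves of one solution."""
    moves = solution.split()
    if not moves:
        return 0.0
    # Moves missing from the dictionary count as 0.0 (assumption of this sketch).
    return sum(bias.get(move, 0.0) for move in moves) / len(moves)


def rank_by_ergonomics(solutions: list[str], bias: dict[str, float]) -> list[str]:
    """Sort solutions from most to least comfortable by mean score."""
    return sorted(solutions, key=lambda s: mean_bias(s, bias), reverse=True)


# A reduced bias table and made-up move sequences, for demonstration only.
bias = {"U": 0.9, "R": 0.8, "R'": 0.8, "F": 0.7, "B": 0.05, "B2": 0.01}
candidates = ["B U B2 F", "R U R' F"]
print(rank_by_ergonomics(candidates, bias))
```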

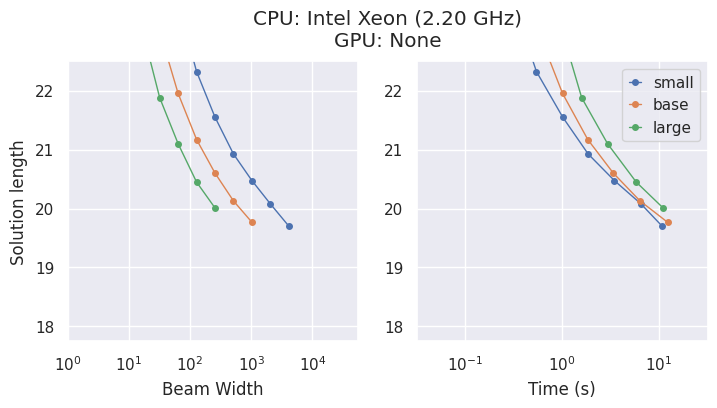

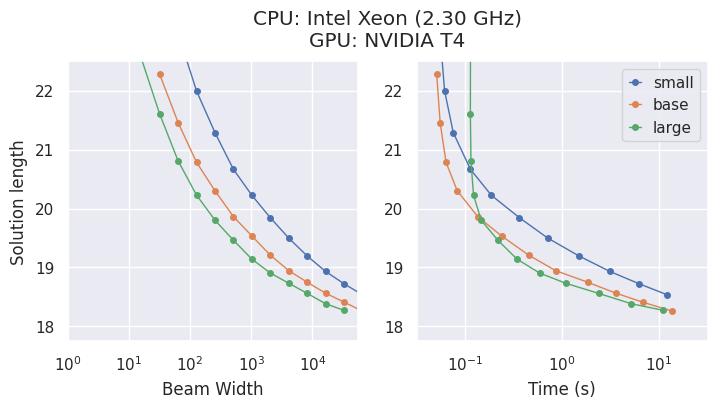

Models & Trade-Off

AlphaCube offers three compute-optimally trained models from the original paper: "small", "base", and "large".

While larger models are more accurate, the best choice depends on your hardware.

- On a CPU, the "small" model is often the most time-efficient choice.
- On a GPU, the "large" model exhibits the highest performance and finds the best solutions in the shortest time.

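The hardware-based choice above can be made explicit in code. The sketch below assumes a PyTorch installation is what exposes the accelerator and falls back to CPU when `torch` cannot be imported. `pick_model_id` and `accelerator_available` are hypothetical helpers. According to the comment in the Basic example, `alphacube.load()` already picks a default on its own, so this is only useful when you want the decision visible or overridable.

```python
def pick_model_id(has_accelerator: bool) -> str:
    """Follow the guidance above: "large" with an accelerator, else "small"."""
    return "large" if has_accelerator else "small"


def accelerator_available() -> bool:
    """Best-effort check for a CUDA GPU or Apple MPS device via PyTorch."""
    try:
        import torch
    except ImportError:
        return False  # no PyTorch, so treat the machine as CPU-only
    if torch.cuda.is_available():
        return True
    mps = getattr(torch.backends, "mps", None)  # absent on older PyTorch builds
    return bool(mps is not None and mps.is_available())


model_id = pick_model_id(accelerator_available())
print(model_id)
```

The resulting string could then be passed as `alphacube.load(model_id=model_id)`.
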
CLI Option

AlphaCube can also be run from the command line:

alphacube \
    --model_id large \
    --scramble "F U2 L2 B2 F U L2 U R2 D2 L' B L2 B' R2 U2" \
    --beam_width 100000 \
    --extra_depths 3 \
    --verbose

The same command with abbreviated flags:

alphacube \
    -m large \
    -s "F U2 L2 B2 F U L2 U R2 D2 L' B L2 B' R2 U2" \
    -bw 100000 \
    -ex 3 \
    -v

Please find more details at API Reference > CLI.

Note: The CLI loads the specified model for every execution, making it best suited for single-use commands rather than repeated solving in a script.
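
For repeated solving, the Python API avoids that per-run cost because the model is loaded once and reused. A minimal sketch of that pattern follows. `solve_many` and `echo_solver` are hypothetical names, and the stand-in solver exists only so the snippet runs without a model.

```python
from typing import Callable, Iterable


def solve_many(
    solve_fn: Callable[..., dict], scrambles: Iterable[str], **solve_kwargs
) -> dict:
    """Solve each scramble with an already-loaded solver and collect results."""
    return {s: solve_fn(scramble=s, **solve_kwargs) for s in scrambles}


# Intended use (requires alphacube and a downloaded model):
#   import alphacube
#   alphacube.load()  # load the model once
#   results = solve_many(alphacube.solve, scrambles, beam_width=1024)


def echo_solver(scramble: str, beam_width: int) -> dict:
    """Stand-in solver: returns the scramble itself instead of a real solution."""
    return {"solutions": [scramble], "num_nodes": beam_width, "time": 0.0}


results = solve_many(echo_solver, ["R U R' U'", "F2 B2"], beam_width=8)
print(len(results))
```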